Instagram recently revealed plans to introduce a new safety feature designed to keep teens under the age of 18 safe while using the app.

The image-sharing platform says it will soon stop adults from sending direct messages to users under 18 who do not follow them back.

If an adult does try to DM a minor who doesn’t follow them back, they will receive a notification telling them that they cannot message that account.

The new safety feature depends on users providing their real age when they sign up to Instagram. However, the company says it is also developing technology capable of predicting users’ ages from their photos.

Instagram’s rules allow only users aged 13 and over to create an account, though its developers are well aware that younger children regularly sign up by lying about their year of birth.

“To address this challenge, we’re developing new artificial intelligence and machine learning technology to help us keep teens safer and apply new age-appropriate features,” the company’s blog post reads.

Instagram is also looking into several ways to make it more difficult for adults who have been behaving suspiciously to interact with teens. One option is to stop these adults from seeing teen accounts in ‘suggested users’ or teens’ content on the ‘explore’ page.

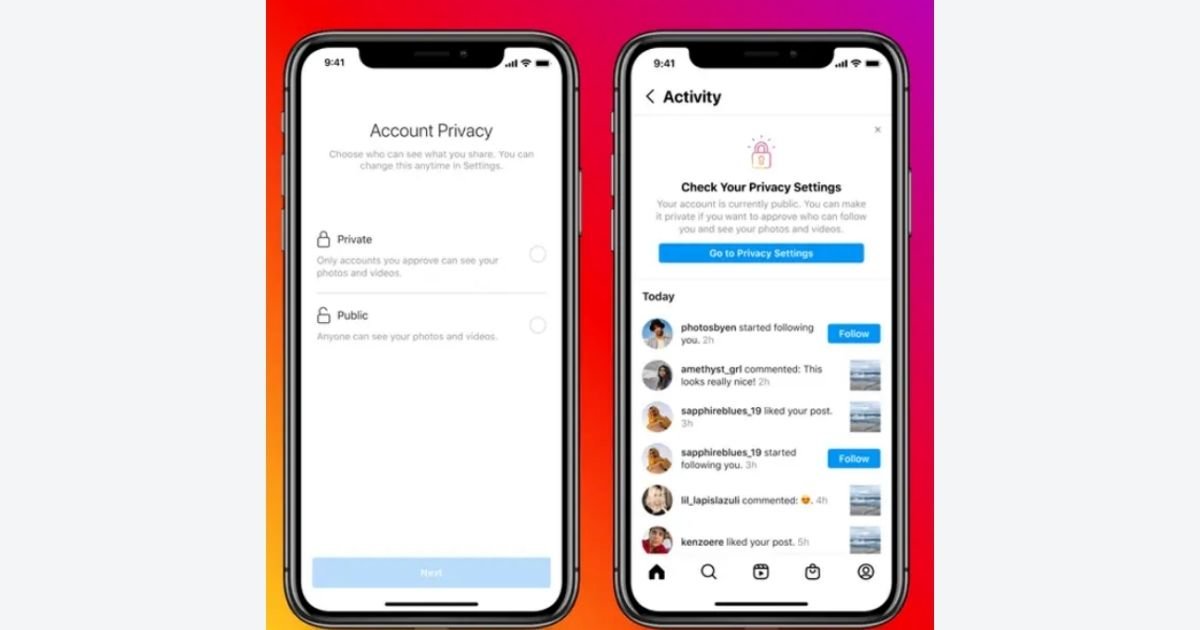

When a person signs up for an Instagram account, it is set to ‘public’ by default unless the user manually changes it to ‘private.’ Now, however, the app will ask users under 18 which they’d prefer from the start, explaining the difference between the two.

Overall, Instagram has committed to creating a safer space for everyone, especially those under the age of 18.